Monitoring and Alerting

Introduction

This section provides the steps to install Prometheus, Grafana, Nginx and Certbot for monitoring your node server, and a means to send alerts using Telegram and PagerDuty. The Prometheus, Grafana, Blackbox Exporter and Nginx steps are adapted from DigitalOcean's guides, and the Certbot steps from the Certbot/Let's Encrypt guide.

NGINX

Before we install Prometheus we will need to install NGINX to serve the HTTP traffic.

sudo apt update

sudo apt install nginx

Before testing Nginx, the firewall software needs to be adjusted to allow access to the service. Nginx registers itself as a service with ufw upon installation, making it straightforward to allow Nginx access.

List the application configurations that ufw knows how to work with by typing:

sudo ufw app list

You should get a listing of the application profiles:

Available applications:

Nginx Full

Nginx HTTP

Nginx HTTPS

OpenSSH

As demonstrated by the output, there are three profiles available for Nginx:

Nginx Full: This profile opens both port 80 (normal, unencrypted web traffic) and port 443 (TLS/SSL encrypted traffic)

Nginx HTTP: This profile opens only port 80 (normal, unencrypted web traffic)

Nginx HTTPS: This profile opens only port 443 (TLS/SSL encrypted traffic)

It is recommended that you enable the most restrictive profile that will still allow the traffic you’ve configured. We will choose 'Nginx Full' to begin with, because port 80 is needed until SSL has been configured. Once testing is complete, you may want to restrict this further by allowing 'Nginx HTTPS' and deleting 'Nginx Full'.

You can enable this by typing:

You can verify the change by typing:

The output will indicate which traffic is allowed:
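The commands for the two steps above are missing from this copy of the guide. Assuming the profile names that ufw listed earlier, enabling and then verifying the 'Nginx Full' profile would look roughly like this:

```shell
# Allow both HTTP (80) and HTTPS (443) through the firewall
sudo ufw allow 'Nginx Full'

# Show the active rules to confirm the profile was added
sudo ufw status
```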

Prometheus

For security purposes, we’ll begin by creating the Prometheus user account, prometheus. We’ll use this account throughout the tutorial to isolate the ownership on Prometheus’ core files and directories.

Create this user with the --no-create-home and --shell /bin/false options so that it can’t be used to log into the server.

Before we download the Prometheus binaries, create the necessary directories for storing Prometheus’ files and data. Following standard Linux conventions, we’ll create a directory in /etc for Prometheus’ configuration files and a directory in /var/lib for its data.

Now, set the user and group ownership on the new directories to the prometheus user.
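As a sketch of the three steps above (the commands themselves are missing from this copy), creating the service user, the two directories, and setting their ownership could look like this:

```shell
# Service account with no home directory and no login shell
sudo useradd --no-create-home --shell /bin/false prometheus

# Configuration directory and data directory
sudo mkdir /etc/prometheus
sudo mkdir /var/lib/prometheus

# Hand both directories to the prometheus user and group
sudo chown prometheus:prometheus /etc/prometheus
sudo chown prometheus:prometheus /var/lib/prometheus
```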

With our user and directories in place, we can now download Prometheus and then create the minimal configuration file to run Prometheus for the first time.

Download Prometheus

First, download and unpack the current stable version of Prometheus into your home directory. You can find the latest binaries along with their checksums on the Prometheus download page.
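A possible download command is sketched below. The version (2.28.1) is taken from the directory name mentioned later in this guide, and the URL is an assumption based on the naming pattern of the Prometheus GitHub releases; check the download page for the current link.

```shell
cd ~
# -L follows redirects, -O keeps the remote file name
curl -LO https://github.com/prometheus/prometheus/releases/download/v2.28.1/prometheus-2.28.1.linux-amd64.tar.gz
```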

Next, use the sha256sum command to generate a checksum of the downloaded file:

Compare the output from this command with the checksum on the Prometheus download page to ensure that your file is both genuine and not corrupted.
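Comparing long checksums by eye is error-prone. The helper below is a small sketch (the function name verify_sha256 is hypothetical, not part of any tool) that compares a file's SHA-256 digest against an expected value and reports the result through its exit status.

```shell
# verify_sha256 FILE EXPECTED
# Succeeds (exit status 0) only when FILE's SHA-256 digest equals EXPECTED.
verify_sha256() {
    actual=$(sha256sum "$1" | awk '{print $1}')
    [ "$actual" = "$2" ]
}
```

It could be used as `verify_sha256 prometheus-2.28.1.linux-amd64.tar.gz CHECKSUM && echo "checksum OK"`, where CHECKSUM is the value copied from the download page.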

Now, unpack the downloaded archive.

This will create a directory called prometheus-2.28.1.linux-amd64 containing two binary files (prometheus and promtool), consoles and console_libraries directories containing the web interface files, a license, a notice, and several example files.

Copy the two binaries to the /usr/local/bin directory.

Set the user and group ownership on the binaries to the prometheus user created earlier.

Copy the consoles and console_libraries directories to /etc/prometheus.

Set the user and group ownership on the directories to the prometheus user. Using the -R flag will ensure that ownership is set on the files inside the directory as well.

Lastly, remove the leftover files from your home directory as they are no longer needed.
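The unpack, copy, ownership and cleanup steps above can be sketched as follows, assuming the 2.28.1 archive sits in your home directory:

```shell
cd ~
tar xvf prometheus-2.28.1.linux-amd64.tar.gz

# Install the two binaries and hand them to the prometheus user
sudo cp prometheus-2.28.1.linux-amd64/prometheus /usr/local/bin/
sudo cp prometheus-2.28.1.linux-amd64/promtool /usr/local/bin/
sudo chown prometheus:prometheus /usr/local/bin/prometheus
sudo chown prometheus:prometheus /usr/local/bin/promtool

# Copy the web interface files and set ownership recursively
sudo cp -r prometheus-2.28.1.linux-amd64/consoles /etc/prometheus
sudo cp -r prometheus-2.28.1.linux-amd64/console_libraries /etc/prometheus
sudo chown -R prometheus:prometheus /etc/prometheus/consoles
sudo chown -R prometheus:prometheus /etc/prometheus/console_libraries

# Remove the archive and the unpacked directory
rm -rf prometheus-2.28.1.linux-amd64.tar.gz prometheus-2.28.1.linux-amd64
```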

Now that Prometheus is installed, we’ll create its configuration and service files in preparation of its first run.

Configure Prometheus

In the /etc/prometheus directory, use nano or your favorite text editor to create a configuration file named prometheus.yml. For now, this file will contain just enough information to run Prometheus for the first time.

Warning: Prometheus’ configuration file uses the YAML format, which forbids tabs for indentation; this guide uses two spaces per level. Prometheus will fail to start if the configuration file is incorrectly formatted.
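Stray tabs are hard to spot in an editor. As a minimal sketch, the hypothetical helper below (has_tabs is an illustrative name) reports whether a file contains any tab character:

```shell
# has_tabs FILE
# Succeeds (exit status 0) if FILE contains at least one tab character.
has_tabs() {
    grep -q "$(printf '\t')" "$1"
}
```

For example, `has_tabs /etc/prometheus/prometheus.yml && echo "tabs found"`. For a fuller check, the promtool binary that ships with Prometheus can validate the file with `promtool check config /etc/prometheus/prometheus.yml`.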

In the global settings, define the default interval for scraping metrics. Note that Prometheus will apply these settings to every exporter unless an individual exporter’s own settings override the globals.

This scrape_interval value tells Prometheus to collect metrics from its exporters every 15 seconds, which is long enough for most exporters.

Now, add Prometheus itself to the list of exporters to scrape from with the following scrape_configs directive:

Prometheus config file part 2 - /etc/prometheus/prometheus.yml

Prometheus uses the job_name to label exporters in queries and on graphs, so be sure to pick something descriptive here.

And, as Prometheus exports important data about itself that you can use for monitoring performance and debugging, we’ve overridden the global scrape_interval directive from 15 seconds to 5 seconds for more frequent updates.

Lastly, Prometheus uses the static_configs and targets directives to determine where exporters are running. Since this particular exporter is running on the same server as Prometheus itself, we can use localhost instead of an IP address along with the default port, 9090.

Your configuration file should now look like this:
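The file contents are missing from this copy of the guide. Based on the settings described above (a 15 second global interval, a job named prometheus scraped every 5 seconds at localhost:9090), a minimal reconstruction would be:

```yaml
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'prometheus'
    scrape_interval: 5s
    static_configs:
      - targets: ['localhost:9090']
```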

Save the file and exit your text editor.

Now, set the user and group ownership on the configuration file to the prometheus user created earlier.

With the configuration complete, we’re ready to test Prometheus by running it for the first time.

Starting Prometheus

Start up Prometheus as the prometheus user, providing the path to both the configuration file and the data directory.
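A sketch of that command, assuming the paths used earlier in this guide and the console directories copied to /etc/prometheus:

```shell
# Run Prometheus in the foreground as the prometheus user
sudo -u prometheus /usr/local/bin/prometheus \
    --config.file /etc/prometheus/prometheus.yml \
    --storage.tsdb.path /var/lib/prometheus/ \
    --web.console.templates=/etc/prometheus/consoles \
    --web.console.libraries=/etc/prometheus/console_libraries
```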

The output contains information about Prometheus’ loading progress, configuration file, and related services. It also confirms that Prometheus is listening on port 9090.

If you get an error message, double-check that you’ve used YAML syntax in your configuration file and then follow the on-screen instructions to resolve the problem.

Now, halt Prometheus by pressing CTRL+C, and then open a new systemd service file.

The service file tells systemd to run Prometheus as the prometheus user, with the configuration file located at /etc/prometheus/prometheus.yml, and to store its data in the /var/lib/prometheus directory. (The details of systemd service files are beyond the scope of this tutorial, but you can learn more at Understanding Systemd Units and Unit Files.)

Copy the following content into the file:

Prometheus service file - /etc/systemd/system/prometheus.service
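The unit file contents are missing from this copy. A minimal sketch consistent with the description above (same user, configuration file and data directory) might be:

```ini
[Unit]
Description=Prometheus
Wants=network-online.target
After=network-online.target

[Service]
User=prometheus
Group=prometheus
Type=simple
ExecStart=/usr/local/bin/prometheus \
    --config.file /etc/prometheus/prometheus.yml \
    --storage.tsdb.path /var/lib/prometheus/ \
    --web.console.templates=/etc/prometheus/consoles \
    --web.console.libraries=/etc/prometheus/console_libraries

[Install]
WantedBy=multi-user.target
```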

Finally, save the file and close your text editor.

To use the newly created service, reload systemd.

You can now start Prometheus using the following command:

To make sure Prometheus is running, check the service’s status.

The output tells you Prometheus’ status, main process identifier (PID), memory use, and more.

If the service’s status isn’t active, follow the on-screen instructions and re-trace the preceding steps to resolve the problem before continuing the tutorial.

When you’re ready to move on, press Q to quit the status command.

Lastly, enable the service to start on boot.
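Taken together, the systemd steps above could be run as follows, assuming the unit is named prometheus.service as in the file path shown earlier:

```shell
sudo systemctl daemon-reload        # pick up the new unit file
sudo systemctl start prometheus     # start the service now
sudo systemctl status prometheus    # check it is active (press Q to quit)
sudo systemctl enable prometheus    # start automatically on boot
```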

Now that Prometheus is up and running, we can install an additional exporter to generate metrics about our server’s resources.

Configure Prometheus to Scrape Node Exporter on the Node Server

Because Prometheus only scrapes exporters which are defined in the scrape_configs portion of its configuration file, we’ll need to add an entry for Node Exporter, just like we did for Prometheus itself.

Before we do that, however, we need to open the firewall on the Node server to allow connections from the Monitoring server.

On the Node Server:

Note: you may also need to open these ports within your AWS security groups
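The firewall command is missing from this copy. As a sketch only, assuming ufw is also in use on the Node server and that Node Exporter listens on its default port 9100, a rule limited to the Monitoring server could look like this. The IP address is a placeholder from the documentation range and must be replaced with your own.

```shell
# Hypothetical address of the Monitoring server; replace with yours
MONITORING_SERVER_IP="203.0.113.10"

# Allow only the Monitoring server to reach Node Exporter
sudo ufw allow from "$MONITORING_SERVER_IP" to any port 9100 proto tcp
```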

Open the configuration file.

At the end of the scrape_configs block, add a new entry called node_exporter.

Prometheus config file part 1 - /etc/prometheus/prometheus.yml

Because Node Exporter is running on the Node server, we need to add in <YOUR_NODE_SERVER_IP> with Node Exporter’s default port, 9100.

Your whole configuration file should look like this:

Prometheus config file - /etc/prometheus/prometheus.yml
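The file contents are missing from this copy. A reconstruction based on the steps above is sketched here. No per-job interval is stated for node_exporter, so this sketch lets the 15 second global default apply.

```yaml
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'prometheus'
    scrape_interval: 5s
    static_configs:
      - targets: ['localhost:9090']
  - job_name: 'node_exporter'
    static_configs:
      - targets: ['<YOUR_NODE_SERVER_IP>:9100']
```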

Save the file and exit your text editor when you’re ready to continue.

Finally, restart Prometheus to put the changes into effect.

Once again, verify that everything is running correctly with the status command.

If the service’s status isn’t set to active, follow the on-screen instructions and re-trace your previous steps before moving on.

We now have Prometheus installed, configured, and running. As a final precaution before connecting to the web interface, we’ll enhance our installation’s security with basic HTTP authentication to ensure that unauthorized users can’t access our metrics.

Securing Prometheus

Prometheus does not include built-in authentication or any other general purpose security mechanism. On the one hand, this means you’re getting a highly flexible system with fewer configuration restraints; on the other hand, it means it’s up to you to ensure that your metrics and overall setup are sufficiently secure.

For simplicity’s sake, we’ll use Nginx to add basic HTTP authentication to our installation, which both Prometheus and its preferred data visualization tool, Grafana, fully support.

Start by installing apache2-utils, which will give you access to the htpasswd utility for generating password files.

Now, create a password file by telling htpasswd where you want to store the file and which username <username> you’d like to use for authentication.

Note: htpasswd will prompt you to enter and re-confirm the password you’d like to associate with this user. Also, make note of both the username and password you enter here, as you’ll need them to log into Prometheus when testing it later.

The result of this command is a newly-created file called .htpasswd, located in the /etc/nginx directory, containing the username and a hashed version of the password you entered.
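The two commands described above can be sketched as follows. Here your_username is a placeholder for the name you choose.

```shell
sudo apt update
sudo apt install apache2-utils

# -c creates the password file; you will be prompted for the password
sudo htpasswd -c /etc/nginx/.htpasswd your_username
```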

Next, configure Nginx to use the newly-created passwords.

First, make a Prometheus-specific copy of the default Nginx configuration file so that you can revert back to the defaults later if you run into a problem.

Then, open the new configuration file.

Locate the location / block under the server block. It should look like:

/etc/nginx/sites-available/default

As we will be forwarding all traffic to Prometheus, replace the try_files directive with the following content:

These settings ensure that users will have to authenticate at the start of each new session. Additionally, the reverse proxy will direct all requests handled by this block to Prometheus.
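The replacement block is missing from this copy. A minimal sketch of a location block that adds basic authentication with the password file created above and proxies to Prometheus on its default port 9090 could be as follows (the realm text is arbitrary):

```nginx
location / {
    # Prompt for the credentials stored in the htpasswd file
    auth_basic "Prometheus server authentication";
    auth_basic_user_file /etc/nginx/.htpasswd;

    # Forward all requests to the local Prometheus instance
    proxy_pass http://localhost:9090;
}
```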

When you’re finished making changes, save the file and close your text editor.

Now, deactivate the default Nginx configuration file by removing the link to it in the /etc/nginx/sites-enabled directory, and activate the new configuration file by creating a link to it.

Before restarting Nginx, check the configuration for errors using the following command:

The output should indicate that the syntax is ok and the test is successful. If you receive an error message, follow the on-screen instructions to fix the problem before proceeding to the next step.

Output of Nginx configuration tests:

Then, reload Nginx to incorporate all of the changes.

Verify that Nginx is up and running.
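The commands for the steps above might look like this. The file name prometheus for the Prometheus-specific copy is an assumption; use whatever name you gave the copy.

```shell
# Swap the default site for the Prometheus-specific one
sudo rm /etc/nginx/sites-enabled/default
sudo ln -s /etc/nginx/sites-available/prometheus /etc/nginx/sites-enabled/

sudo nginx -t                  # syntax check
sudo systemctl reload nginx    # apply the changes
sudo systemctl status nginx    # confirm the service is active
```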

If your output doesn’t indicate that the service’s status is active, follow the on-screen messages and re-trace the preceding steps to resolve the issue before continuing.

At this point, we have a fully-functional and secured Prometheus server, so we can log into the web interface to begin looking at metrics.

Testing Prometheus

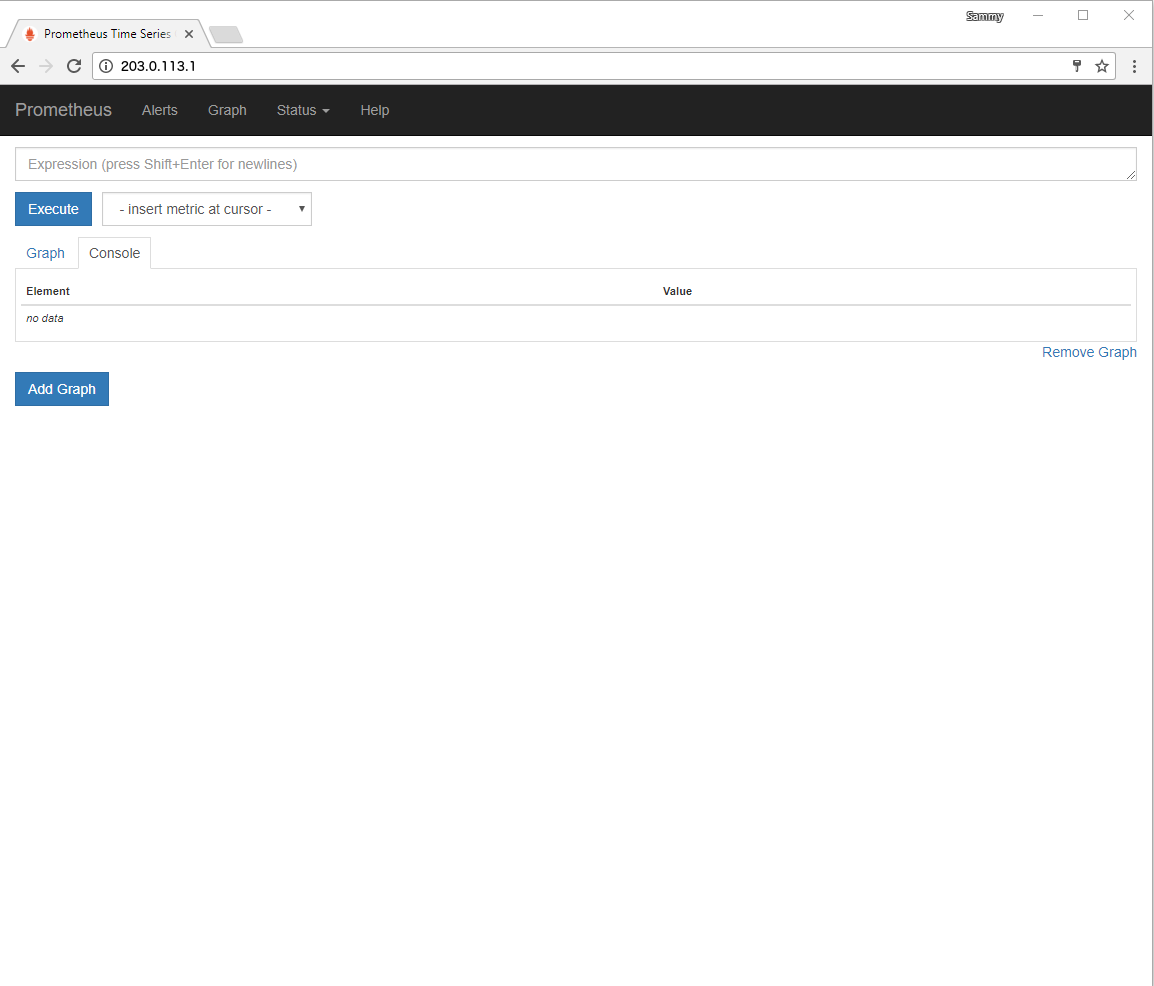

Prometheus provides a basic web interface for monitoring the status of itself and its exporters, executing queries, and generating graphs. But, due to the interface’s simplicity, the Prometheus team recommends installing and using Grafana for anything more complicated than testing and debugging.

In this tutorial, we’ll use the built-in web interface to ensure that Prometheus and Node Exporter are up and running before moving on to install Blackbox Exporter and Grafana.

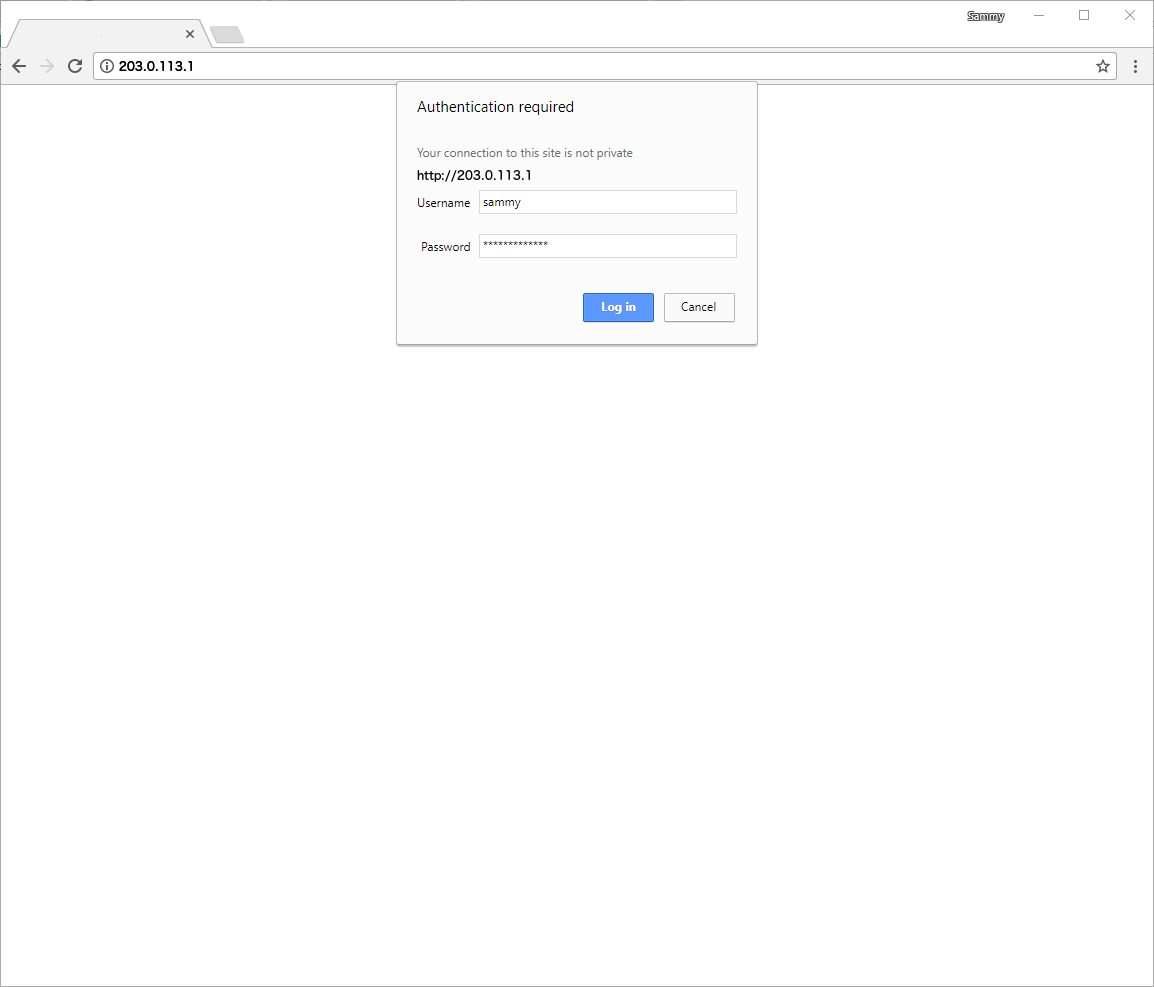

To begin, point your web browser to http://your_server_ip.

In the HTTP authentication dialogue box, enter the username and password you chose earlier.

Once logged in, you’ll see the Expression Browser, where you can execute and visualize custom queries.

Before executing any expressions, verify the status of both Prometheus and Node Exporter by clicking first on the Status menu at the top of the screen and then on the Targets menu option. As we have configured Prometheus to scrape both itself and Node Exporter, you should see both targets listed in the UP state.

If either exporter is missing or displays an error message, check the service’s status with the following commands:

The output for both services should report a status of Active: active (running). If a service either isn’t active at all or is active but still not working correctly, follow the on-screen instructions and re-trace the previous steps before continuing.

Installing Blackbox

The Blackbox exporter enables blackbox probing of endpoints over HTTP, HTTPS, DNS, TCP and ICMP. We can use it for checking the uptime status of both the Node and Monitoring servers.

Create a Service User

For security purposes, we’ll create a blackbox_exporter user account. We’ll use this account throughout the tutorial to run Blackbox Exporter and to isolate the ownership on appropriate core files and directories. This ensures Blackbox Exporter can't access and modify data it doesn't own.

Create this user with the useradd command using the --no-create-home and --shell /bin/false flags so that it can’t be used to log into the server:
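With the flags named above, the command would be along these lines:

```shell
sudo useradd --no-create-home --shell /bin/false blackbox_exporter
```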

With the user in place, let’s download and configure Blackbox Exporter.

Installing Blackbox Exporter

First, download the latest stable version of Blackbox Exporter to your home directory. You can find the latest binaries along with their checksums on the Prometheus Download page.

Before unpacking the archive, verify the file’s checksums using the following sha256sum command:

Compare the output from this command with the checksum on the Prometheus download page to ensure that your file is both genuine and not corrupted:

If the checksums don’t match, remove the downloaded file and repeat the preceding steps to re-download the file.

When you’re sure the checksums match, unpack the archive:

This creates a directory called blackbox_exporter-0.19.0.linux-amd64, containing the blackbox_exporter binary file, a license, and example files.

Copy the binary file to the /usr/local/bin directory.

Set the user and group ownership on the binary to the blackbox_exporter user, ensuring non-root users can’t modify or replace the file:

Lastly, we’ll remove the archive and unpacked directory, as they’re no longer needed.
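The download and install steps above can be sketched as follows. The version (0.19.0) comes from the directory name mentioned above; the URL is an assumption based on the naming pattern of the Blackbox Exporter GitHub releases.

```shell
cd ~
curl -LO https://github.com/prometheus/blackbox_exporter/releases/download/v0.19.0/blackbox_exporter-0.19.0.linux-amd64.tar.gz

# Compare this digest with the one on the download page before continuing
sha256sum blackbox_exporter-0.19.0.linux-amd64.tar.gz

tar xvf blackbox_exporter-0.19.0.linux-amd64.tar.gz
sudo cp blackbox_exporter-0.19.0.linux-amd64/blackbox_exporter /usr/local/bin/
sudo chown blackbox_exporter:blackbox_exporter /usr/local/bin/blackbox_exporter

# Clean up the archive and the unpacked directory
rm -rf blackbox_exporter-0.19.0.linux-amd64.tar.gz blackbox_exporter-0.19.0.linux-amd64
```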

Next, let’s configure Blackbox Exporter to probe endpoints over the HTTP protocol and then run it.

Configuring and Running Blackbox Exporter

Let’s create a configuration file defining how Blackbox Exporter should check endpoints. We’ll also create a systemd unit file so we can manage Blackbox’s service using systemd.

We’ll specify the list of endpoints to probe in the Prometheus configuration in the next step.

First, create the directory for Blackbox Exporter’s configuration. Per Linux conventions, configuration files go in the /etc directory, so we’ll use this directory to hold the Blackbox Exporter configuration file as well:

Then set the ownership of this directory to the blackbox_exporter user you created earlier:

In the newly-created directory, create the blackbox.yml file which will hold the Blackbox Exporter configuration settings:

We’ll configure Blackbox Exporter to use the default http prober to probe endpoints. Probers define how Blackbox Exporter checks if an endpoint is running. The http prober checks endpoints by sending an HTTP request to the endpoint and testing its response code. You can select which HTTP method to use for probing, as well as which status codes to accept as successful responses. Other popular probers include the tcp prober for probing via the TCP protocol, the icmp prober for probing via the ICMP protocol and the dns prober for checking DNS entries.

For this tutorial, we’ll use the http prober to probe the endpoint running on port 80 using the HTTP GET method. By default, the prober treats status codes in the 2xx range as valid, so we don’t need to provide a list of valid status codes.

We’ll configure a timeout of 5 seconds, which means Blackbox Exporter will wait 5 seconds for the response before reporting a failure. Depending on your application type, choose any value that matches your needs.

Note: Blackbox Exporter’s configuration file uses the YAML format, which forbids tabs for indentation; this guide uses two spaces per level. If the configuration file is formatted incorrectly, Blackbox Exporter will fail to start up.

Add the following configuration to the file:

/etc/blackbox_exporter/blackbox.yml
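The file contents are missing from this copy. A minimal sketch matching the description above (an http prober using GET, a 5 second timeout, and the default 2xx status codes) could be the following. The module name http_2xx is the one referenced later in this guide.

```yaml
modules:
  http_2xx:
    prober: http
    timeout: 5s
    http:
      # An empty list keeps the default: any 2xx status is a success
      valid_status_codes: []
      method: GET
```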

You can find more information about the configuration options in the Blackbox Exporter’s documentation.

Save the file and exit your text editor.

Before you create the service file, set the user and group ownership on the configuration file to the blackbox_exporter user created earlier.

Now create the service file so you can manage Blackbox Exporter using systemd:

Add the following content to the file:

/etc/systemd/system/blackbox_exporter.service
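The unit file contents are missing from this copy. A sketch consistent with the description that follows (run as blackbox_exporter, configuration at /etc/blackbox_exporter/blackbox.yml) might be:

```ini
[Unit]
Description=Blackbox Exporter
Wants=network-online.target
After=network-online.target

[Service]
User=blackbox_exporter
Group=blackbox_exporter
Type=simple
ExecStart=/usr/local/bin/blackbox_exporter --config.file /etc/blackbox_exporter/blackbox.yml

[Install]
WantedBy=multi-user.target
```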

This service file tells systemd to run Blackbox Exporter as the blackbox_exporter user with the configuration file located at /etc/blackbox_exporter/blackbox.yml. The details of systemd service files are beyond the scope of this tutorial, but if you’d like to learn more see the Understanding Systemd Units and Unit Files tutorial.

Save the file and exit your text editor.

Finally, reload systemd to use your newly-created service file:

Now start Blackbox Exporter:

Make sure it started successfully by checking the service’s status:

The output contains information about Blackbox Exporter’s process, including the main process identifier (PID), memory use, logs and more.

If the service’s status isn’t active (running), follow the on-screen logs and retrace the preceding steps to resolve the problem before continuing the tutorial.

Lastly, enable the service to make sure Blackbox Exporter will start when the server restarts:

Now that Blackbox Exporter is fully configured and running, we can configure Prometheus to collect metrics about probing requests to our endpoint, so we can create alerts based on those metrics and set up notifications for alerts using Alertmanager.

Configuring Prometheus To Scrape Blackbox Exporter

As mentioned earlier, the list of endpoints to be probed is located in the Prometheus configuration file as part of the Blackbox Exporter’s targets directive. In this step you’ll configure Prometheus to use Blackbox Exporter to scrape the Nginx web server running on port 80 that you configured earlier in this guide.

Open the Prometheus configuration file in your editor:

At this point, it should look like the following:

/etc/prometheus/prometheus.yml

At the end of the scrape_configs directive, add the following entry, which will tell Prometheus to probe the endpoint running on the local port 80 using the Blackbox Exporter’s module http_2xx, configured earlier.

/etc/prometheus/prometheus.yml

By default, Blackbox Exporter runs on port 9115 with metrics available on the /probe endpoint.

The scrape_configs configuration for Blackbox Exporter differs from the configuration for other exporters. The most notable difference is the targets directive, which lists the endpoints being probed instead of the exporter’s address. The exporter’s address is specified using the appropriate set of __address__ labels.

You’ll find a detailed explanation of the relabel directives in the Prometheus documentation.

Your Prometheus configuration file will now look like this:

Prometheus config file - /etc/prometheus/prometheus.yml
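The file contents are missing from this copy. The sketch below reconstructs the full file under the assumptions stated in this section: the probed endpoint is http://localhost:80, the module is http_2xx, and Blackbox Exporter listens on localhost:9115. The relabel rules pass the target to the exporter as a URL parameter and then point the scrape at the exporter itself.

```yaml
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'prometheus'
    scrape_interval: 5s
    static_configs:
      - targets: ['localhost:9090']
  - job_name: 'node_exporter'
    static_configs:
      - targets: ['<YOUR_NODE_SERVER_IP>:9100']
  - job_name: 'blackbox'
    metrics_path: /probe
    params:
      module: [http_2xx]
    static_configs:
      - targets:
        - http://localhost:80
    relabel_configs:
      # Send the listed target as the ?target= parameter
      - source_labels: [__address__]
        target_label: __param_target
      # Keep the probed URL as the instance label
      - source_labels: [__param_target]
        target_label: instance
      # Scrape the exporter rather than the target directly
      - target_label: __address__
        replacement: localhost:9115
```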

Save the file and close your text editor.

Restart Prometheus to put the changes into effect:

Make sure it’s running as expected by checking the Prometheus service status:

If the service’s status isn’t active (running), follow the on-screen logs and retrace the preceding steps to resolve the problem before continuing the tutorial.

At this point, you’ve configured Prometheus to scrape metrics from Blackbox Exporter.

Installing Grafana

Grafana is an open-source data visualization and monitoring tool that we will integrate with Prometheus to provide a graphical representation of the data being pulled from the Node Server.

It will require the following:

A registered Domain name from a Domain Registrar.

An A record with your_domain pointing to your server’s public IP address.

An A record with www.your_domain pointing to your server’s public IP address.

Nginx installed and configured

Installation of a Let's Encrypt SSL certificate with Certbot

Ensure port 443 is open for the Monitoring server within your AWS security groups

You may also need to open port 80 within your AWS Security groups temporarily until SSL has been configured and port 443 is available

Complete Nginx Configuration

The first step is to install Nginx, which we partially completed before installing Prometheus. We will pick up where we left off. These steps are taken from DigitalOcean's Nginx guide.

We can check with the systemd init system to make sure the service is running by typing:

You should receive the following showing the service is active

Setting Up Server Blocks (Recommended)

When using the Nginx web server, server blocks (similar to virtual hosts in Apache) can be used to encapsulate configuration details and host more than one domain from a single server. We will set up a domain called your_domain, but you should replace this with your own domain name.

Nginx on Ubuntu 20.04 has one server block enabled by default that is configured to serve documents out of a directory at /var/www/html. While this works well for a single site, it can become unwieldy if you are hosting multiple sites. Instead of modifying /var/www/html, let’s create a directory structure within /var/www for our your_domain site, leaving /var/www/html in place as the default directory to be served if a client request doesn’t match any other sites.

Create the directory for your_domain as follows, using the -p flag to create any necessary parent directories:

Next, assign ownership of the directory with the $USER environment variable:

The permissions of your web roots should be correct if you haven’t modified your umask value, which sets default file permissions. To ensure that your permissions are correct and allow the owner to read, write, and execute the files while granting only read and execute permissions to groups and others, you can input the following command:
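The three commands for the directory, ownership and permission steps above can be sketched as follows, with your_domain standing in for your own domain name:

```shell
# Create the web root, including any missing parent directories
sudo mkdir -p /var/www/your_domain/html

# Give the current user ownership of the web root
sudo chown -R $USER:$USER /var/www/your_domain/html

# Owner: read/write/execute; group and others: read/execute
sudo chmod -R 755 /var/www/your_domain
```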

Next, create a sample index.html page using nano or your favorite editor:

Inside, add the following sample HTML:

/var/www/your_domain/html/index.html

Save and close the file by typing CTRL and X then Y and ENTER when you are finished.

In order for Nginx to serve this content, it’s necessary to create a server block with the correct directives. Instead of modifying the default configuration file directly, let’s make a new one at /etc/nginx/sites-available/your_domain:

Paste in the following configuration block, which is similar to the default, but updated for our new directory and domain name:

/etc/nginx/sites-available/your_domain
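The block itself is missing from this copy. A sketch modelled on the default Ubuntu server block, with the root and server_name updated as described, could be:

```nginx
server {
        listen 80;
        listen [::]:80;

        root /var/www/your_domain/html;
        index index.html index.htm index.nginx-debian.html;

        server_name your_domain www.your_domain;

        location / {
                try_files $uri $uri/ =404;
        }
}
```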

Notice that we’ve updated the root configuration to our new directory, and the server_name to our domain name.

Next, let’s enable the file by creating a link from it to the sites-enabled directory, which Nginx reads from during startup:

Two server blocks are now enabled and configured to respond to requests based on their listen and server_name directives (you can read more about how Nginx processes these directives here):

your_domain: Will respond to requests for your_domain and www.your_domain.

default: Will respond to any requests on port 80 that do not match the other block.

To avoid a possible hash bucket memory problem that can arise from adding additional server names, it is necessary to adjust a single value in the /etc/nginx/nginx.conf file. Open the file:

Find the server_names_hash_bucket_size directive and remove the # symbol to uncomment the line. If you are using nano, you can quickly search for words in the file by pressing CTRL and W.

/etc/nginx/nginx.conf

Save and close the file when you are finished.

Next, test to make sure that there are no syntax errors in any of your Nginx files:

If there aren’t any problems, restart Nginx to enable your changes:
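The commands for enabling the server block, testing the configuration and restarting Nginx can be sketched as follows, using the your_domain file name from above:

```shell
# Enable the new server block
sudo ln -s /etc/nginx/sites-available/your_domain /etc/nginx/sites-enabled/

# Check every Nginx configuration file for syntax errors
sudo nginx -t

# Restart Nginx so the changes take effect
sudo systemctl restart nginx
```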

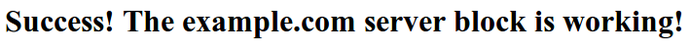

Nginx should now be serving your domain name. You can test this by navigating to http://your_domain, where you should see something like this:

Install Let's Encrypt SSL Certificates with Certbot

Let's Encrypt is a free SSL service that can be installed on Linux hosts as an easy way to secure websites. The installation steps are taken from Certbot's guide here.

Ensure your version of snapd is up-to-date

Install certbot

Execute the following instruction on the command line on the machine to ensure that the certbot command can be run.

Run this command to get a certificate and have Certbot edit your Nginx configuration automatically to serve it, turning on HTTPS access in a single step.

Select both domains by entering 1,2.

Choose to redirect HTTP to HTTPS.

Congrats! Your certificate has been installed.

Optional: Test the certificate's strength using Qualys.

Note: The certificate will expire in three months and would normally auto-renew. You can test that the auto-renewal process will work by running the following command:
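The Certbot commands are missing from this copy. Assuming the snap-based installation that this section describes, the sequence would be roughly:

```shell
# Make sure snapd's core snap is installed and current
sudo snap install core; sudo snap refresh core

# Install Certbot and make the certbot command available
sudo snap install --classic certbot
sudo ln -s /snap/bin/certbot /usr/bin/certbot

# Obtain a certificate and let Certbot edit the Nginx configuration
sudo certbot --nginx

# Simulate a renewal without changing any certificates
sudo certbot renew --dry-run
```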

Configuring Grafana

Download the Grafana GPG key with wget, then pipe the output to apt-key. This will add the key to your APT installation’s list of trusted keys, which will allow you to download and verify the GPG-signed Grafana package:

In this command, the option -q turns off the status update message for wget, and -O outputs the file that you downloaded to the terminal. These two options ensure that only the contents of the downloaded file are pipelined to apt-key.

Next, add the Grafana repository to your APT sources:

Refresh your APT cache to update your package lists:

You can now proceed with the installation:

Once Grafana is installed, use systemctl to start the Grafana server:

Next, verify that Grafana is running by checking the service’s status:

You will receive output similar to this:

This output contains information about Grafana’s process, including its status, Main Process Identifier (PID), and more. active (running) shows that the process is running correctly.

Lastly, enable the service to automatically start Grafana on boot:

You will receive the following output:

This confirms that systemd has created the necessary symbolic links to autostart Grafana.
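The installation commands are missing from this copy. The sketch below follows the apt-key approach described above. The key and repository URLs are those commonly used when this guide was written and are an assumption here; they may have changed, so check Grafana's current installation instructions.

```shell
# Add Grafana's signing key and package repository
wget -q -O - https://packages.grafana.com/gpg.key | sudo apt-key add -
sudo add-apt-repository "deb https://packages.grafana.com/oss/deb stable main"

# Install Grafana
sudo apt update
sudo apt install grafana

# Start the service, check it, and enable it on boot
sudo systemctl start grafana-server
sudo systemctl status grafana-server
sudo systemctl enable grafana-server
```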

Grafana is now installed and ready for use. Next, you will secure your connection to Grafana with a reverse proxy and SSL certificate.

Setting Up the Reverse Proxy

Using an SSL certificate will ensure that your data is secure by encrypting the connection to and from Grafana. But, to make use of this connection, you’ll first need to reconfigure Nginx as a reverse proxy for Grafana.

Open the Nginx configuration file you created earlier in this guide when you set up the Nginx server block with Let’s Encrypt. You can use any text editor, but for this tutorial we’ll use nano:

Locate the following block:

/etc/nginx/sites-available/your_domain

Because you already configured Nginx to communicate over SSL and because all web traffic to your server already passes through Nginx, you just need to tell Nginx to forward all requests to Grafana, which runs on port 3000 by default.

Delete the existing try_files line in this location block and replace it with the following proxy_pass option:

/etc/nginx/sites-available/your_domain

This will map the proxy to the appropriate port. Once you’re done, save and close the file by pressing CTRL+X, Y, and then ENTER if you’re using nano.
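The edited block is missing from this copy. With Grafana on its default port 3000, the location block would look roughly like this after the change:

```nginx
location / {
    # Forward all requests to the local Grafana instance
    proxy_pass http://localhost:3000;
}
```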

Now, test the new settings to make sure everything is configured correctly:

You will receive the following output:

Finally, activate the changes by reloading Nginx:

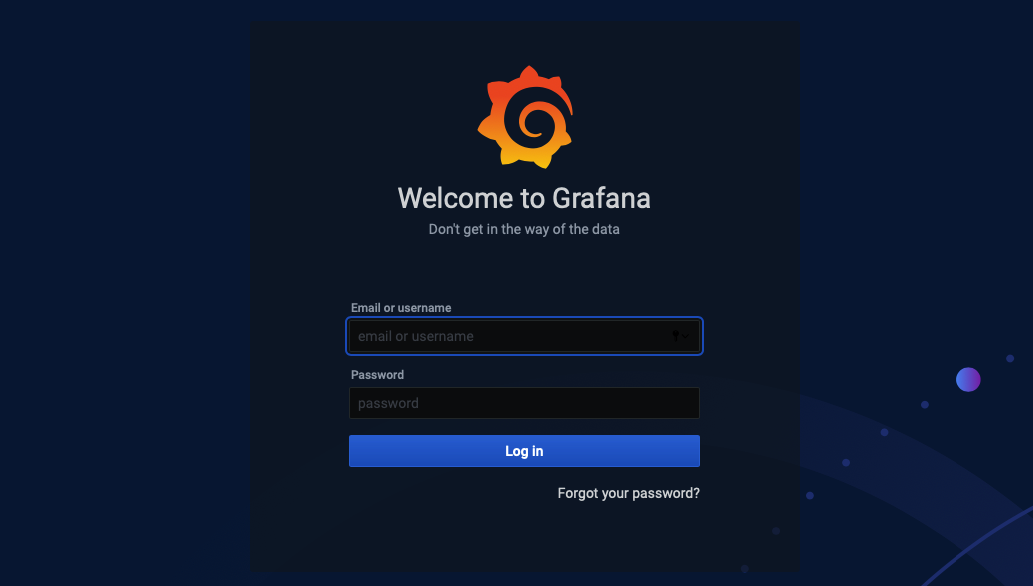

You can now access the default Grafana login screen by pointing your web browser to https://your_domain. If you’re unable to reach Grafana, verify that your firewall is set to allow traffic on port 443 and then re-trace the previous instructions.

With the connection to Grafana encrypted, you can now implement additional security measures, starting with changing Grafana’s default administrative credentials.

Updating Credentials

Because every Grafana installation uses the same administrative credentials by default, it is a best practice to change your login information as soon as possible. In this step, you’ll update the credentials to improve security.

Start by navigating to https://your_domain from your web browser. This will bring up the default login screen where you’ll see the Grafana logo, a form asking you to enter an Email or username and Password, a Log in button, and a Forgot your password? link.

Enter admin into both the Email or username and Password fields and then click on the Log in button.

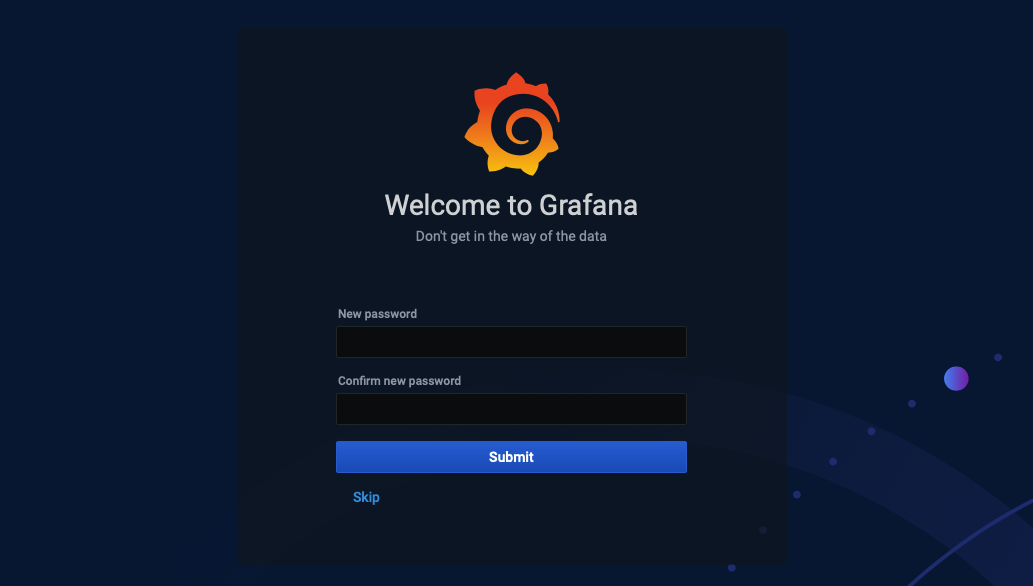

On the next screen, you’ll be asked to make your account more secure by changing the default password:

Enter the password you’d like to start using into the New password and Confirm new password fields.

From here, you can click Submit to save the new information or press Skip to skip this step. If you skip, you will be prompted to change the password next time you log in.

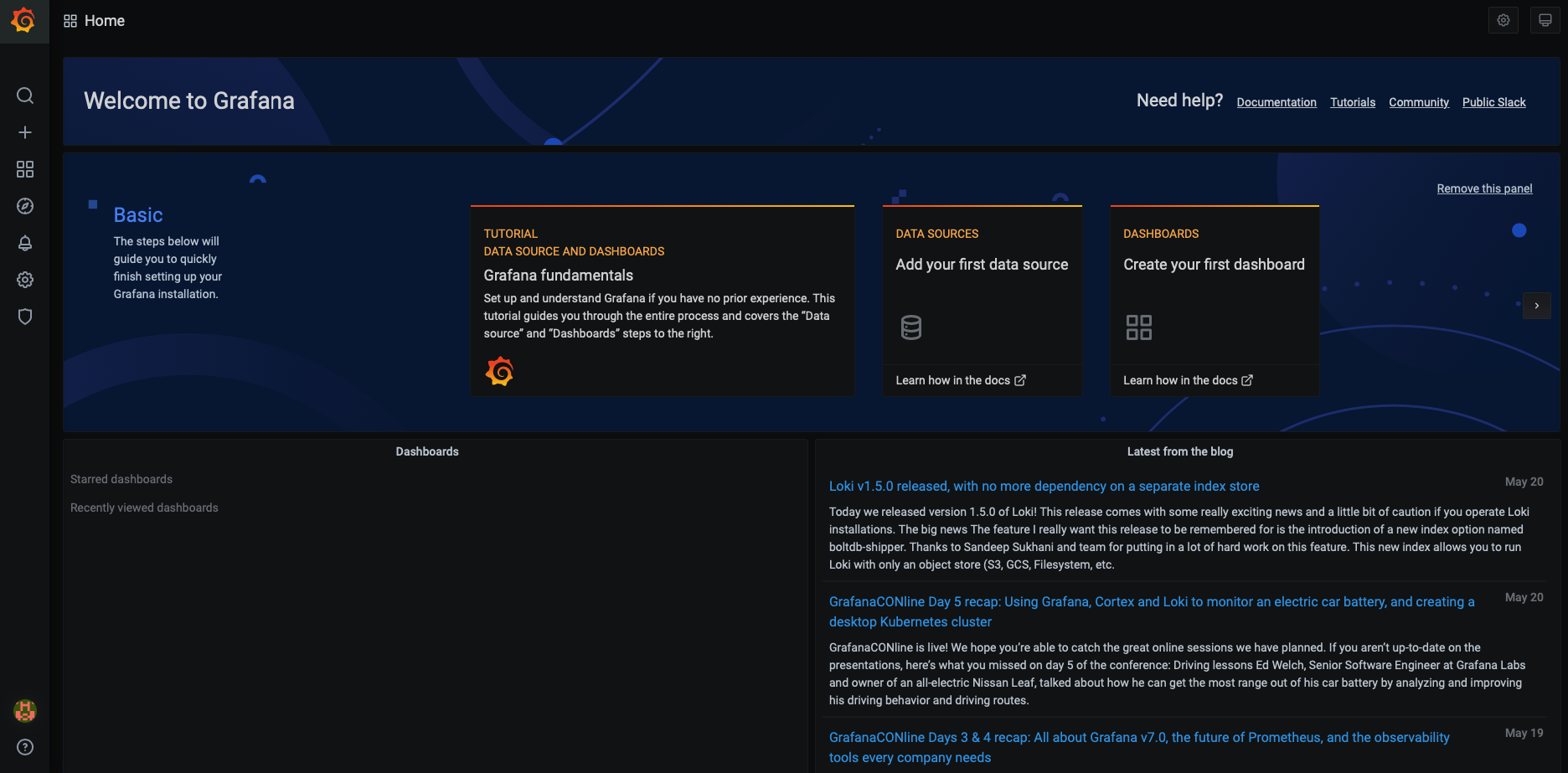

In order to increase the security of your Grafana setup, click Submit. You’ll go to the Welcome to Grafana dashboard:

You’ve now secured your account by changing the default credentials. Next, you will make changes to your Grafana configuration so that nobody can create a new Grafana account without your permission.

Disabling Grafana Registrations and Anonymous Access

Grafana provides options that allow visitors to create user accounts for themselves and preview dashboards without registering. When Grafana isn’t accessible via the internet or when it’s working with publicly available data like service statuses, you may want to allow these features. However, when using Grafana online to work with sensitive data, anonymous access could be a security problem. To fix this problem, make some changes to your Grafana configuration.

Start by opening Grafana’s main configuration file for editing:

Locate the following allow_sign_up directive under the [users] heading:

/etc/grafana/grafana.ini

Enabling this directive with true adds a Sign Up button to the login screen, allowing users to register themselves and access Grafana.

Disabling this directive with false removes the Sign Up button and strengthens Grafana’s security and privacy.

Uncomment this directive by removing the ; at the beginning of the line and then setting the option to false:

/etc/grafana/grafana.ini

Next, locate the following enabled directive under the [auth.anonymous] heading:

/etc/grafana/grafana.ini

Setting enabled to true gives non-registered users access to your dashboards; setting this option to false limits dashboard access to registered users only.

Uncomment this directive by removing the ; at the beginning of the line and then setting the option to false.

Save the file and exit your text editor.
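After saving, the file should contain allow_sign_up = false under [users] and enabled = false under [auth.anonymous]. As a quick sanity check, the sketch below reads the active (uncommented) value of a key from an INI section. The helper name ini_value is hypothetical and not part of Grafana; it assumes simple key = value lines with no = inside the value.

```shell
# Hypothetical helper: print the active value of KEY inside [SECTION] of an INI file.
# Lines that are still commented out with ';' are ignored because their key
# text would be ";allow_sign_up", which does not match.
ini_value() {
  awk -F '=' -v section="[$2]" -v key="$3" '
    /^\[/ {                                   # a section header: remember it
      current = $0
      gsub(/[ \t\r]+$/, "", current)          # drop trailing whitespace
      next
    }
    current == section {
      k = $1
      gsub(/[ \t]/, "", k)                    # key with whitespace stripped
      if (k == key) {
        v = $2
        gsub(/^[ \t]+|[ \t\r]+$/, "", v)      # trim the value
        print v
      }
    }
  ' "$1"
}

# Example (run on the Grafana host):
#   ini_value /etc/grafana/grafana.ini users allow_sign_up
#   ini_value /etc/grafana/grafana.ini auth.anonymous enabled
```

Both calls should print false once the edits above have been made; an empty result suggests the directive is still commented out.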

To activate the changes, restart Grafana:

Verify that everything is working by checking Grafana’s service status:

Like before, the output will report that Grafana is active (running).
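The restart and status check can be sketched as a small shell function. This assumes Grafana was installed from the apt package and runs under systemd as the grafana-server unit; adjust GRAFANA_UNIT if your unit name differs. The function name is illustrative only.

```shell
# Assumption: the apt package registers the unit as "grafana-server".
GRAFANA_UNIT="grafana-server"

restart_and_check_grafana() {
  # Restart so the edited grafana.ini is re-read; stop here if the restart fails.
  sudo systemctl restart "$GRAFANA_UNIT" || return 1
  # "is-active" prints the unit state ("active" when running) and
  # exits non-zero for any other state.
  systemctl is-active "$GRAFANA_UNIT"
}

# Example (run on the Grafana host):
#   restart_and_check_grafana
```

For the full status output, including recent log lines, run sudo systemctl status grafana-server instead.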

Now, point your web browser to https://your_domain. To return to the Sign Up screen, bring your cursor to your avatar in the lower left of the screen and click on the Sign out option that appears.

Once you have signed out, verify that there is no Sign Up button and that you can’t sign in without entering login credentials.

At this point, Grafana is fully configured and ready for use.

Add a Dashboard

We will add two dashboards:

The default Grafana 'Node Exporter Full' dashboard

The Radix team provided 'Radix Node' dashboard (optional)

Note: There are more dashboards available from the Grafana website

Configuring a Data Source

Before we import a dashboard we need to connect to our Prometheus data source. From the Grafana homepage, click the cog icon, then 'Data Sources' and select 'Prometheus'

Leave the HTTP URL as the default http://localhost:9090

Scroll to the bottom and click 'Save & Test'
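If 'Save & Test' reports an error, it can help to confirm that Prometheus is reachable from the Grafana host before debugging further. The sketch below calls Prometheus' built-in /-/healthy endpoint; the function name and the PROM_URL variable are illustrative, and the default URL is the one used in the step above.

```shell
# URL of the Prometheus server; defaults to the data source URL used above.
PROM_URL="${PROM_URL:-http://localhost:9090}"

prometheus_is_healthy() {
  # -f makes curl exit non-zero on HTTP errors, so the function's exit
  # status reflects whether the health endpoint answered successfully.
  curl -fsS --max-time 5 "$PROM_URL/-/healthy" >/dev/null
}

# Example (run on the Grafana host):
#   prometheus_is_healthy && echo "Prometheus reachable" || echo "Prometheus NOT reachable"
```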

Import Node Exporter Full Dashboard

The next step is to set up your Grafana dashboards.

Each dashboard on the Grafana website has an id; the 'Node Exporter Full' dashboard has an id of 1860. From the main Grafana window, click on the '+' icon, followed by 'Import', then enter the id 1860 and click 'Load'.

All going well, you should start seeing data populate the dashboard.

Note: you may need to adjust the time range in the top right of the window to a few minutes until enough data has been collected.

Radix Node Dashboard (Optional)

The Radix Team have provided their own Grafana dashboard which provides individual node and network wide metrics. Because our Node Server runs in a Docker environment there are additional steps we need to perform so that Prometheus can scrape the data within the Docker container. Please head to the link below to configure this dashboard.

Configure Radix Node Dashboard on Grafana

Alerting

In this section we will be configuring alerts for two popular services

Telegram - a free chat app

PagerDuty - an enterprise level incident management service (with a free tier)

Telegram

We will configure Grafana to send alerts to a Telegram chat account

Create the Telegram bot

The first thing to do is to search for 'BotFather' in Telegram.

Enter /start to see the list of available commands.

Enter /newbot to request a new bot account

Give your bot a name and a username. The username must end with 'bot', e.g. RadixMonitoring_bot

You will receive a response with your API token. Keep this secure and safe.

You will also need your Chat ID. Search for 'Chat ID Echo' in Telegram

Enter /start and you will receive your Chat ID in return.

Now head back to Grafana and, from the main Grafana homepage, go to the Alerting page and select 'Notification channels'. Give the channel a name, e.g. Telegram. Change the 'Type' to Telegram and enter your API token and Chat ID.

Click Save and Test. All going well, you should receive an alert.
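If no alert arrives, the token and Chat ID can be checked independently of Grafana by calling the Telegram Bot API's sendMessage method directly. The following is a minimal sketch; the function name is illustrative, and the token and Chat ID in the example are placeholders for the values you received above.

```shell
# Send a plain text message through the Telegram Bot API.
# Arguments: <bot API token> <chat id> <message text>
send_telegram() {
  token=$1
  chat_id=$2
  text=$3
  # --data-urlencode turns the request into a POST and safely encodes
  # spaces and special characters in the message text.
  curl -fsS --max-time 10 \
    "https://api.telegram.org/bot${token}/sendMessage" \
    --data-urlencode "chat_id=${chat_id}" \
    --data-urlencode "text=${text}"
}

# Example (placeholders, replace with your own values):
#   send_telegram "<YOUR-API-TOKEN>" "<YOUR-CHAT-ID>" "Test message from my node server"
```

A JSON response containing "ok":true indicates that the token and Chat ID are valid, which narrows any remaining problem down to the Grafana channel settings.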

Additional Bot Security

Now that the bot is set up, we will add some security options. By default, anyone can add our bot to a group, which we don't want.

To disable this, open the chat with the BotFather in Telegram and enter /setjoingroups

Select the bot whose setting you want to change. BotFather will reply with the current status of the feature.

The current status is ENABLED, so choose the Disable option. BotFather will then confirm the change.

Create Grafana Alerts

By default, the Grafana alerting functionality is not compatible with query variables. Both the Grafana Node Exporter and Radix Node dashboards use variables, so for any panel we would like to receive alerts from we need to create a copy and edit its query, replacing each variable with its actual value. In the example below we use the Validator 'UP/DOWN' status panel from the Radix Node Dashboard and create a copy of this panel.

The important bit is to edit the metrics field to specify your node IP

If we replace any instance variables with <NODE-SERVER-IP>:443 the Alert tab will appear and we can configure our alert.
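To illustrate the substitution, the sketch below replaces a dashboard variable in a query string with a fixed instance value. The query up{instance="$node"} and the variable name node are hypothetical stand-ins, since the panel's real query depends on the dashboard version; the IP address is a documentation placeholder.

```shell
# Replace a Grafana template variable written as $name in a query string.
# Arguments: <query> <variable name without the $> <replacement value>
# Limitations: only the $name form is handled (not ${name} or [[name]]),
# and the value must not contain '|' or '&'.
expand_query() {
  query=$1
  var=$2
  value=$3
  # The sed pattern becomes s|\$name|value|g, where \$ matches a literal dollar sign.
  printf '%s\n' "$query" | sed "s|\\\$${var}|${value}|g"
}

# Example with a hypothetical query and a placeholder IP:
#   expand_query 'up{instance="$node"}' node '203.0.113.10:443'
#   prints: up{instance="203.0.113.10:443"}
```

In practice you would make the same edit by hand in the panel's metrics field; the function only shows what the query looks like before and after.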

Click the 'Alert' tab and:

Give the rule a name

Set the conditions. For this rule we can use WHEN sum() is below 1

Configure the error handling as desired

Send the notifications to Telegram and/or PagerDuty

Include a message

Save the Dashboard

The first 'No Data' alert will be received almost instantaneously.

The 'Down' alert takes approximately 10 minutes to trigger because Grafana goes through two stages.

An Amber stage starts 5 minutes after the server goes offline and lasts for 5 minutes.

A Red stage follows, so the alert triggers and the notification is sent about 10 minutes after the server goes offline.

If we bring the server down and wait for 10 minutes we should see the following alert in Telegram. Bringing the server back up again will send a second alert to notify us that the server is now 'OK'.

Following the same procedure, similar alerts can be created for CPU, RAM, disk, etc., set to trigger when a metric falls below or exceeds a certain threshold, e.g. when CPU or RAM is above 75% utilisation.
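As a starting point for CPU and RAM alerts, the sketch below builds two commonly used Node Exporter queries with the instance filled in explicitly, so they contain no dashboard variables. The function names are illustrative, the instance value in the example is a placeholder (Node Exporter usually listens on port 9100), and the metric names assume a reasonably recent Node Exporter release.

```shell
PROM_URL="${PROM_URL:-http://localhost:9090}"

# CPU utilisation in percent: 100 minus the average idle rate over 5 minutes.
cpu_usage_query() {
  printf '100 - (avg(rate(node_cpu_seconds_total{mode="idle",instance="%s"}[5m])) * 100)\n' "$1"
}

# RAM utilisation in percent, based on the kernel's "available" estimate.
mem_usage_query() {
  printf '(1 - node_memory_MemAvailable_bytes{instance="%s"} / node_memory_MemTotal_bytes{instance="%s"}) * 100\n' "$1" "$1"
}

# Run a query against the Prometheus HTTP API to preview the current value.
prom_query() {
  curl -fsS --max-time 10 -G "$PROM_URL/api/v1/query" --data-urlencode "query=$1"
}

# Example (placeholder instance):
#   prom_query "$(cpu_usage_query '<NODE-SERVER-IP>:9100')"
```

Either query can be pasted into the copied panel's metrics field, with the alert condition set to, for example, WHEN avg() is above 75.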

PagerDuty

PagerDuty is a cloud-hosted incident management platform that integrates with many existing alerting and incident management services. They offer a free tier with limited functionality which we will use to begin with. An additional benefit of PagerDuty is that it offers SMS, email and phone call notifications.

There are two key steps

Sign-up for an account

Register a new 'Service' and integrate with Grafana (using their Prometheus integration key). Their Prometheus Integration guide is here. Note: we only need the Integration key so we can copy that to the Grafana alerting section.

Once you have created a PagerDuty free tier account here, create a new 'Service'.

Select the default 'Escalation Policy'

Select the default 'Intelligent' alert grouping

Search for 'Prometheus' at the integrations step.

Copy the 'Integration Key' and save it somewhere safe.

Now click on your Profile Avatar in the top right hand corner and select 'My Profile'. In the 'Contact Information' tab add your mobile number and/or an email address to receive SMS, Phone call and email alerts as desired.

Once complete, head back to Grafana and create another 'Notification Channel' for PagerDuty. Add your Integration Key, then save and test.

You should receive an alert via your chosen channels (SMS/Phone/Email) and your PagerDuty Incident dashboard should also update.
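If nothing arrives, the Integration Key can be tested without Grafana by sending a trigger event to the PagerDuty Events API v2. This is a minimal sketch: the function names are illustrative, the key in the example is a placeholder, and the payload builder does no JSON escaping, so keep the summary free of double quotes and backslashes.

```shell
# Build a minimal Events API v2 "trigger" event.
# Arguments: <integration key> <summary> <source>
pagerduty_payload() {
  printf '{"routing_key":"%s","event_action":"trigger","payload":{"summary":"%s","source":"%s","severity":"critical"}}\n' "$1" "$2" "$3"
}

# Post the event to PagerDuty; takes the same arguments as pagerduty_payload.
send_pagerduty_test() {
  curl -fsS --max-time 10 -X POST \
    -H 'Content-Type: application/json' \
    -d "$(pagerduty_payload "$1" "$2" "$3")" \
    https://events.pagerduty.com/v2/enqueue
}

# Example (placeholder key, replace with your own):
#   send_pagerduty_test "<YOUR-INTEGRATION-KEY>" "Test alert from my node server" "grafana"
```

A successful call opens a new incident on the service, which you can resolve from the PagerDuty dashboard afterwards.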

That's it! You have completed all the steps for adding monitoring and alerting to your Radix validator node.

Additional Sources
